Reinforcement Learning from Human Feedback (RLHF)

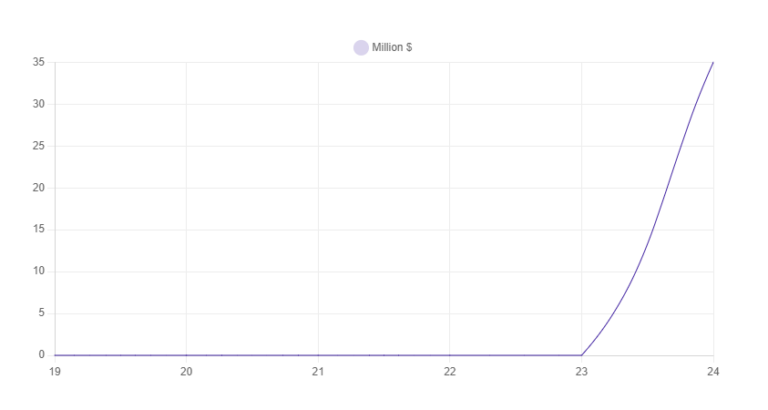

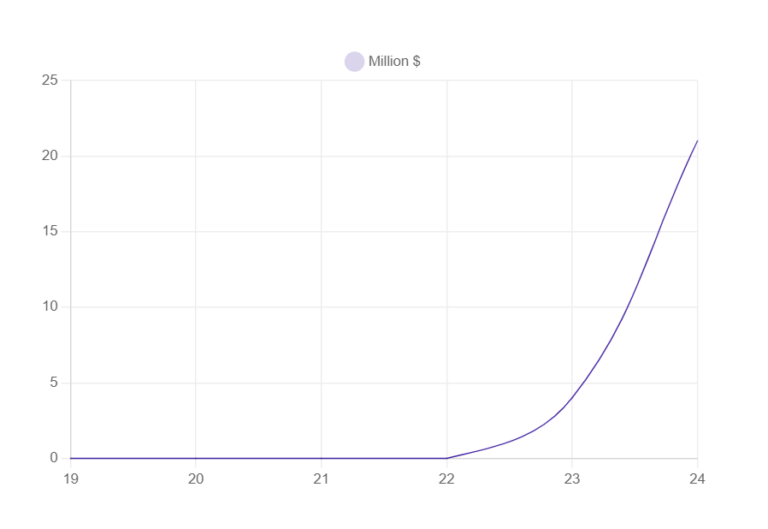

Trend

RLHF is a training process in which people rate or compare a model's outputs, and those judgments are used to evaluate and train the model, helping to reduce inaccuracies and errors in AI systems. This trend highlights the importance of combining human judgment with AI technology to improve model performance.
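
To make the idea concrete, here is a minimal sketch of one common way human feedback becomes a training signal: a person picks the better of two model responses, and a reward model is penalized when it scores the rejected response higher than the chosen one. The function name `preference_loss` and the example scores are illustrative assumptions, not part of any specific platform or library.

```python
import math


def preference_loss(reward_chosen: float, reward_rejected: float) -> float:
    """Pairwise (Bradley-Terry style) loss: -log(sigmoid(chosen - rejected)).

    Hypothetical helper for illustration. The loss is small when the reward
    model scores the human-preferred response higher, and large otherwise.
    """
    margin = reward_chosen - reward_rejected
    # Numerically stable form of log(1 + exp(-margin)).
    if margin >= 0:
        return math.log1p(math.exp(-margin))
    return -margin + math.log1p(math.exp(margin))


# Assumed example scores: the reward model agrees with the human rater...
agrees = preference_loss(reward_chosen=2.0, reward_rejected=0.0)
# ...versus disagrees with the human rater.
disagrees = preference_loss(reward_chosen=0.0, reward_rejected=2.0)
print(agrees < disagrees)
```

In a full RLHF pipeline, a loss of this kind would typically be averaged over many human comparisons to fit the reward model, which then guides further training of the AI model.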

Trendsetters

Prolific’s platform addresses the need for human feedback and validation to improve the accuracy and performance of AI models.